Overview

The Google Cloud Storage (GCS) connector provides a convenient way of exporting data directly from the Decentriq platform to a GCS bucket.

See here for more information about importing audiences into Permutive.

Prerequisites

- Have a Google Cloud account.

- Have an existing Cloud Storage bucket where data can be uploaded.

- Have a service account that is allowed to upload objects to that bucket, together with its JSON key (credentials).

Step-by-step guide

Follow the steps to select a dataset for export, then choose Google Cloud Storage from the list of connectors.

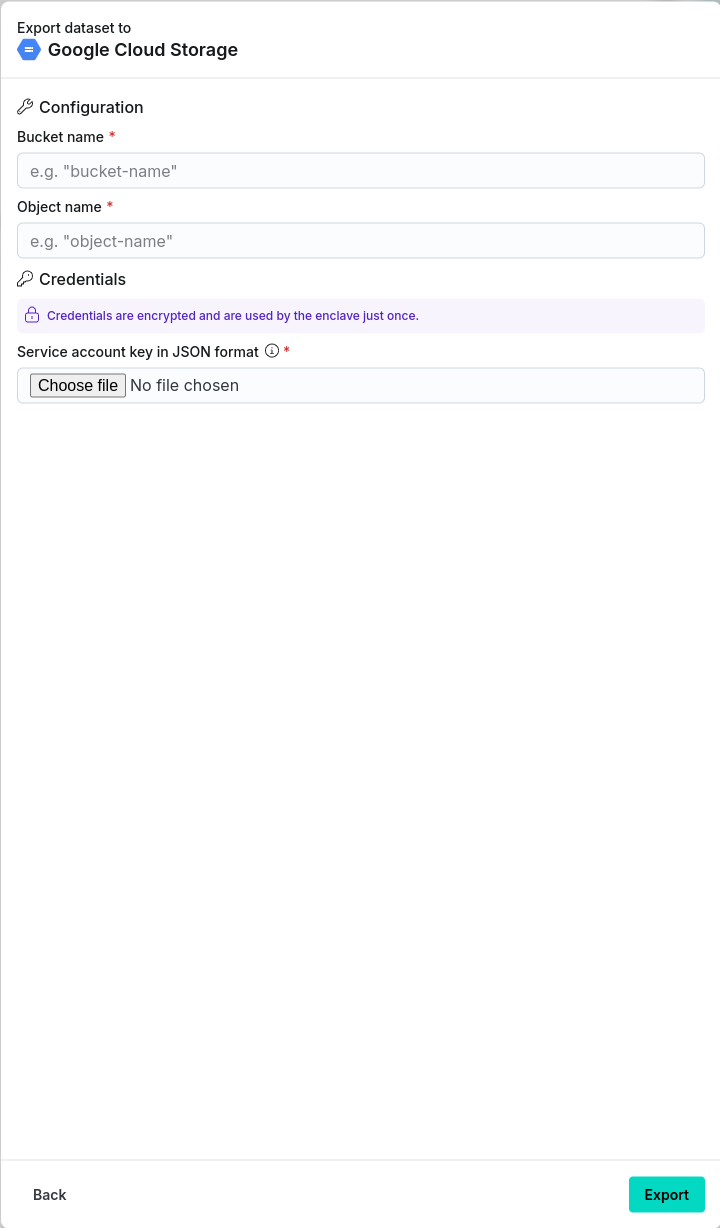

Enter the required information (see here for how to retrieve bucket-related information):

- Bucket name: Name of the GCS bucket where the data should be exported.

- Object name: Name under which the dataset is stored in the bucket.

- Credentials: The service account credentials associated with the Google Cloud account (see the Google documentation for more details). The credentials take the form of a JSON key file, generated when setting up the service account, which contains the following fields:

  - type: Identifies the type of credentials (always set to service_account).

  - project_id: The ID of your Google Cloud project.

  - private_key_id: The identifier of the private key.

  - private_key: The private key used for authentication, in PEM format.

  - client_email: The email address of the service account.

  - client_id: A unique identifier for the service account.

  - auth_uri: The URL used to initiate OAuth2 authentication requests.

  - token_uri: The URL used to retrieve OAuth2 tokens.

  - auth_provider_x509_cert_url: The URL of Google's public certificates, used for verifying signatures.

  - client_x509_cert_url: The URL of the public certificate for the service account.

  - universe_domain: The domain of the Google API endpoints (googleapis.com by default).
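As an optional local check before providing the key file to the connector, the Python sketch below (standard library only) parses the JSON and confirms that it contains the fields listed above, that `type` is `service_account`, and that `private_key` looks like a PEM block. It is not part of the Decentriq platform, and it is deliberately strict: it simply expects every field listed above. The function name `check_service_account_key` is hypothetical.

```python
import json

# Field names taken from the credentials description above.
EXPECTED_FIELDS = (
    "type", "project_id", "private_key_id", "private_key", "client_email",
    "client_id", "auth_uri", "token_uri", "auth_provider_x509_cert_url",
    "client_x509_cert_url", "universe_domain",
)


def check_service_account_key(raw_json: str) -> list[str]:
    """Return a list of problems found in the key; an empty list means it looks well-formed."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(key, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in EXPECTED_FIELDS if name not in key]
    if key.get("type") != "service_account":
        problems.append("type must be 'service_account'")
    # Service account keys carry a PEM-encoded private key.
    if not str(key.get("private_key", "")).startswith("-----BEGIN PRIVATE KEY-----"):
        problems.append("private_key does not look like a PEM-encoded key")
    return problems
```

For example, `check_service_account_key(Path("service-account.json").read_text())` (with `from pathlib import Path`) returning an empty list only means the file passed these structural checks; it does not prove that the key is active or authorized for the bucket.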

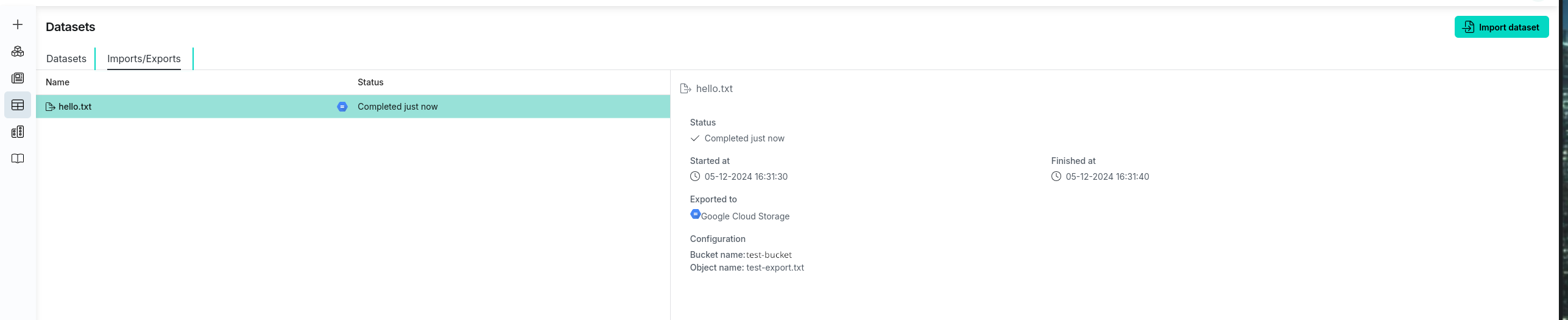
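Mistyped bucket or object names are a common reason for a failed export. The Python sketch below (standard library only) checks a subset of Google's published Cloud Storage naming rules: bucket names of 3 to 63 characters (up to 222 when they contain dots, with each dot-separated part at most 63), using only lowercase letters, digits, `-`, `_` and `.`; object names of 1 to 1024 UTF-8 bytes without carriage returns or line feeds. It does not cover every rule, and whether the Decentriq connector adds its own restrictions is not stated here. The function names are hypothetical.

```python
import re

# Lowercase letters, digits, '-', '_' and '.', starting and ending with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9._-]*[a-z0-9]")
# Four dot-separated groups of 1-3 digits, e.g. 192.168.5.4.
_IPV4_RE = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def bucket_name_problems(name: str) -> list[str]:
    """Return human-readable problems with a bucket name (empty list if none found)."""
    problems = []
    # Names containing dots may be up to 222 characters; otherwise 63.
    max_len = 222 if "." in name else 63
    if not 3 <= len(name) <= max_len:
        problems.append(f"length must be between 3 and {max_len} characters")
    if not _BUCKET_RE.fullmatch(name):
        problems.append(
            "use only lowercase letters, digits, '-', '_' or '.', "
            "and start and end with a letter or digit"
        )
    if any(len(part) > 63 for part in name.split(".")):
        problems.append("each dot-separated component must be at most 63 characters")
    if _IPV4_RE.fullmatch(name):
        problems.append("must not look like an IP address")
    if name.startswith("goog") or "google" in name:
        problems.append("must not start with 'goog' or contain 'google'")
    return problems


def object_name_problems(name: str) -> list[str]:
    """Return human-readable problems with an object name (empty list if none found)."""
    problems = []
    if not 1 <= len(name.encode("utf-8")) <= 1024:
        problems.append("must be 1-1024 bytes when UTF-8 encoded")
    if "\r" in name or "\n" in name:
        problems.append("must not contain carriage return or line feed characters")
    if name in (".", ".."):
        problems.append("must not be '.' or '..'")
    return problems
```

Using `fullmatch` rather than `match` with `$` avoids accepting a name that ends in a stray newline.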

After clicking the Export button, navigate to the Imports/Exports tab on the Datasets page to track the status of the export.

Once the export has completed, the dataset is available in the specified GCS bucket.
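To confirm the result outside the Decentriq UI, the object can be looked up directly in the bucket. The Python sketch below uses `Bucket.get_blob` from the `google-cloud-storage` client library, which returns `None` when the object does not exist, and reports the object's size and last-update time. It assumes the identity used has read access to the bucket (for example the `storage.objects.get` permission), which an upload-only service account may lack; the function names and arguments are hypothetical.

```python
# Sketch: confirm that the exported object has arrived in the bucket.
# Assumptions: the google-cloud-storage package is installed and the key used
# can read objects in the bucket (e.g. storage.objects.get).


def describe_export(bucket, object_name: str):
    """Return (size_in_bytes, updated_timestamp) for the object, or None if it is absent.

    `bucket` is expected to behave like google.cloud.storage.Bucket.
    """
    blob = bucket.get_blob(object_name)  # None when the object does not exist
    if blob is None:
        return None
    return blob.size, blob.updated


def check_export(key_path: str, bucket_name: str, object_name: str):
    """Load a service account key file and look up the exported object."""
    from google.cloud import storage  # pip install google-cloud-storage

    client = storage.Client.from_service_account_json(key_path)
    return describe_export(client.bucket(bucket_name), object_name)
```

For example, `check_export("service-account.json", "my-export-bucket", "audience.csv")` returns `None` while the object is missing; all three arguments are placeholders.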