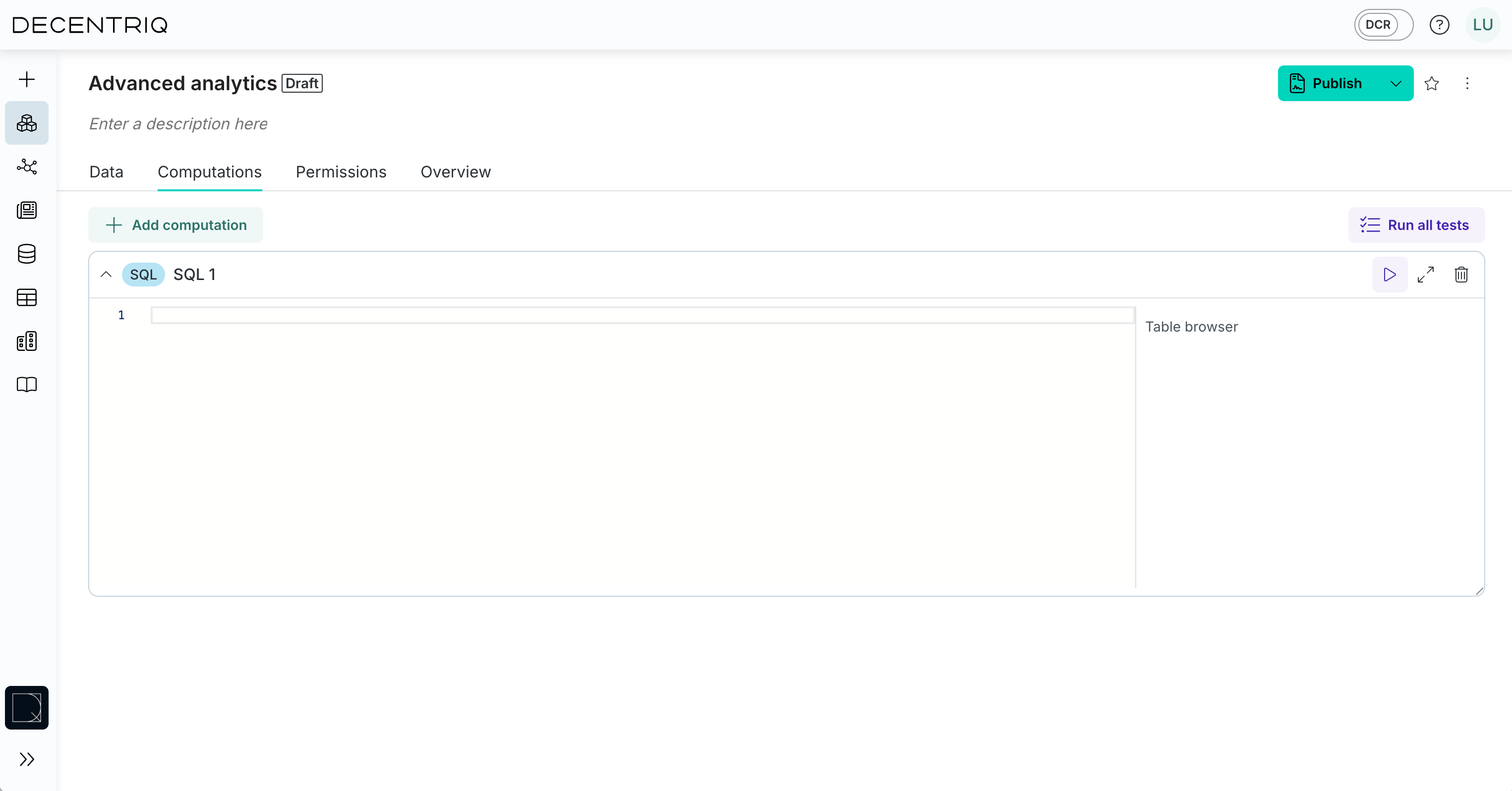

SQL computation

The SQL computation runs your query using SQLite.

Please refer to the official SQLite documentation for details of the supported syntax and functions.

The SQL can reference data from Table datasets in the FROM clause. Assuming a Table dataset named table1 exists, you can write:

SELECT sum(col_a) AS sum_col_a
FROM table1
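
To try the query outside the product, the following is a minimal sketch that reproduces it with Python's built-in sqlite3 module. It only mimics the setup: the in-memory database, the sample values 1, 2, 3, and the helper name run_example are assumptions for illustration, not how the SQL computation loads Table datasets internally.

```python
import sqlite3


def run_example():
    """Run the example query against a stand-in for the Table dataset table1."""
    # Assumption: an in-memory SQLite database stands in for the product's storage.
    conn = sqlite3.connect(":memory:")

    # Hypothetical sample data; a real Table dataset would supply col_a.
    conn.execute("CREATE TABLE table1 (col_a INTEGER)")
    conn.executemany("INSERT INTO table1 (col_a) VALUES (?)", [(1,), (2,), (3,)])

    # The query from the text: the dataset name is used directly in FROM.
    cur = conn.execute("SELECT sum(col_a) AS sum_col_a FROM table1")
    column_names = [d[0] for d in cur.description]
    row = cur.fetchone()

    conn.close()
    return column_names, row


if __name__ == "__main__":
    names, row = run_example()
    print(names, row)
```

The AS alias determines the name of the output column, so the result has a single column called sum_col_a with one row holding the total.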