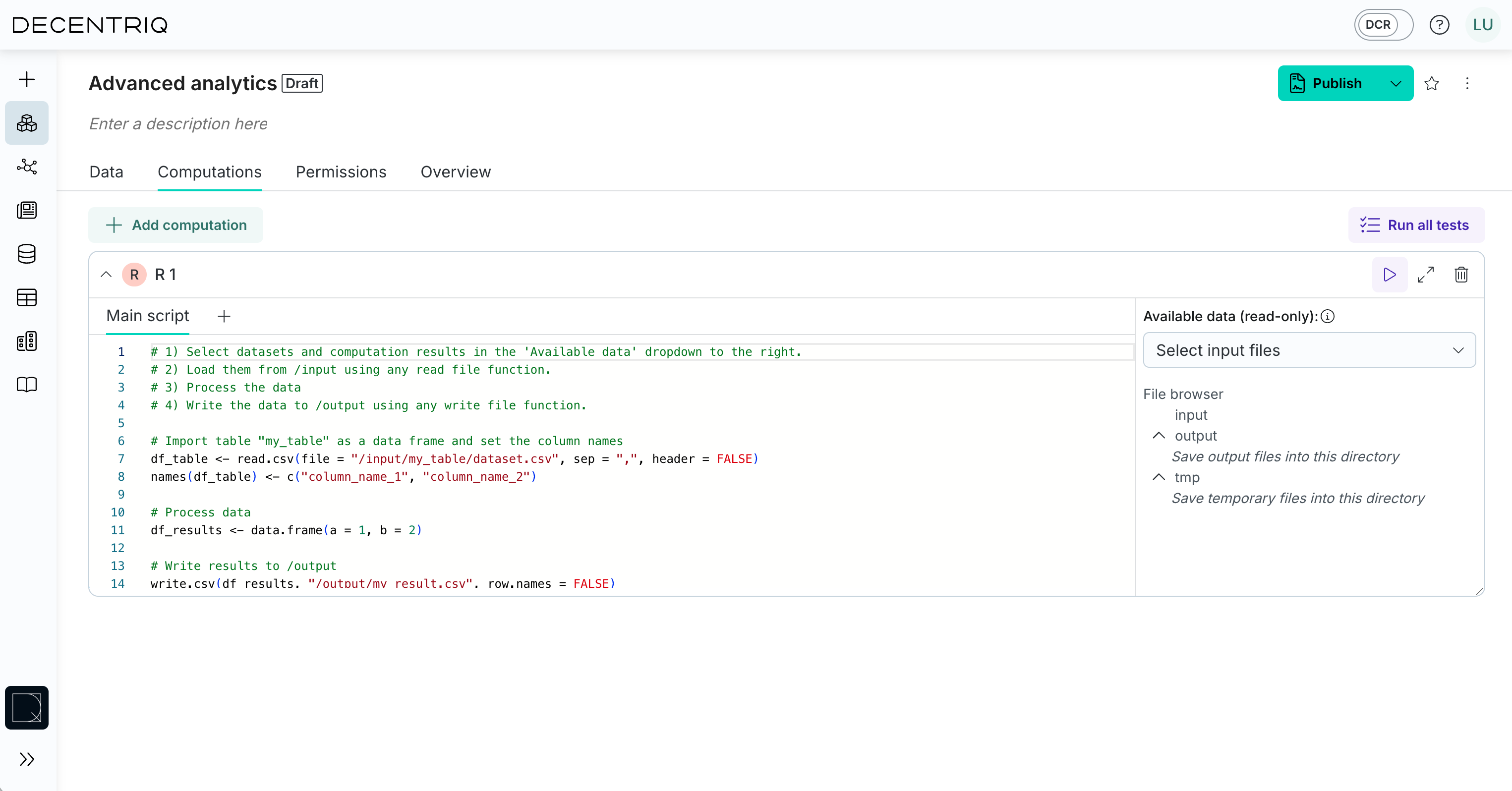

R computation

The R computation reads from /input and writes results to /output.

It can execute standard R 4.3.2 code with the following supported libraries.

Library versions

Hmisc (5.1.1)pROC (1.18.4)ggplot2 (3.4.4)ggmosaic (0.3.3)dplyr (1.1.3)ggforce (0.4.1)lubridate (1.9.3)lme4 (1.1.34)coxme (2.2.18.1)cmprsk (2.2.11)msm (1.7)randomForestSRC (3.2.2)survminer (0.4.9)tidyverse (2.0.0)corrplot (0.9.2)readxl (1.4.3)lime (0.5.3)e1071 (1.7-13)effects (4.2-2)lmtest (0.9-40)AER (1.2-10)sandwich (3.0-2)vcd (1.4-11)mclust (6.0.0)lcmm (2.1.0)openxlsx (4.2.5.2)xgboost (1.7.5.1)conflicted (1.2.0)factoextra (1.0.7)naniar (1.0.0)

The list of available libraries is not exhaustive.

Note that by default the R computations cannot access the network or internet. Also note that GPU-computations are currently not supported.

Input

The R computation can access data from datasets and computations which have been listed as its dependencies in the Available data section from the /input directory.

The paths differ based on the type of the dependency:

- A Table dataset

Table 1can be loaded as/input/Table 1/dataset.csv - A File dataset

File 1can be loaded as/input/File 1 - The results of a depending Python or R computation can be loaded under

/input/<computation name>/<filename>the same way those results have been written to/output/<filename>in the depending computation - The results of a SQL computation can be loaded under

/input/<computation name>/dataset.csv

Tmp

Computations can write intermediate results to a /tmp directory. Those files will not be part of the output.

Output

The computation should write results to the /output directory. The results as well as any text streamed to stdout are accessible by all users with data analyst permission on the computation.