Datasets

The examples on this page assume the following setup: a client, a session, and a published Data Clean Room (DCR) with a table node (`salary_data`) and a file node (`file_node`).

```python
import decentriq_platform.legacy as dq
import decentriq_platform.legacy.sql as dqsql

USER_EMAIL = "@@ YOUR EMAIL HERE @@"
OTHER_EMAIL = "@@ OTHER EMAIL HERE @@"
API_TOKEN = "@@ YOUR TOKEN HERE @@"

# Create a client and an authenticated session with the enclave
client = dq.create_client(USER_EMAIL, API_TOKEN)
enclave_specs = dq.enclave_specifications.versions([...])
auth, _ = client.create_auth_using_decentriq_pki(enclave_specs)
session = client.create_session(auth, enclave_specs)

# Define a DCR with a table node and a file node
builder = dq.DataRoomBuilder("My DCR", enclave_specs=enclave_specs)

data_node_builder = dqsql.TabularDataNodeBuilder(
    "salary_data",
    schema=[
        # (column name, type, nullable)
        ("name", dqsql.PrimitiveType.STRING, False),
        ("salary", dqsql.PrimitiveType.FLOAT64, False),
    ],
)
data_node_builder.add_to_builder(
    builder,
    authentication=client.decentriq_pki_authentication,
    users=[USER_EMAIL],
)

FILE_NODE_ID = builder.add_data_node("file_node", is_required=True)

# Allow USER_EMAIL to update the DCR status and to manage data in the file node
builder.add_user_permission(
    email=USER_EMAIL,
    authentication_method=client.decentriq_pki_authentication,
    permissions=[
        dq.Permissions.update_data_room_status(),
        dq.Permissions.leaf_crud(FILE_NODE_ID),
    ],
)

# Build and publish the DCR
dcr = builder.build()
TABLE_NODE_ID = data_node_builder.table_name  # e.g. "salary_data"
DCR_ID = session.publish_data_room(dcr)
```
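Instead of hard-coding the email and API token in the script, you may prefer to read them from environment variables. The following is a minimal sketch. The variable names `DQ_USER_EMAIL` and `DQ_API_TOKEN` and the helper `load_credentials` are hypothetical and are not defined by the SDK.

```python
import os


def load_credentials(env=os.environ):
    """Return (email, token) read from the hypothetical DQ_* environment variables.

    Raises KeyError listing any missing variable, so a misconfigured shell
    fails before any call to the platform is made.
    """
    names = ("DQ_USER_EMAIL", "DQ_API_TOKEN")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variable(s): {', '.join(missing)}")
    return env["DQ_USER_EMAIL"], env["DQ_API_TOKEN"]
```

With this helper, the setup would begin with `USER_EMAIL, API_TOKEN = load_credentials()` and then call `dq.create_client(USER_EMAIL, API_TOKEN)` as before.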

List datasets and get details

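```python
# Returns a list with one dictionary per dataset
datasets = client.get_available_datasets()

# Read fields of the first dataset
dataset_name = datasets[0]["name"]
manifest_hash = datasets[0]["manifestHash"]

# Fetch the details of a single dataset by its manifest hash
dataset = client.get_dataset(manifest_hash)
```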
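Because each entry is a plain dictionary, you can look up datasets with ordinary Python. Here is a minimal sketch that collects the `manifestHash` of every dataset with a given name. It relies only on the `name` and `manifestHash` keys used above. `find_manifest_hashes` and the sample data are hypothetical. The helper returns a list because it does not assume that dataset names are unique.

```python
def find_manifest_hashes(datasets, name):
    """Return the manifestHash of every dataset whose "name" equals `name`.

    `datasets` is expected to be the list of dictionaries returned by
    client.get_available_datasets().
    """
    return [d["manifestHash"] for d in datasets if d.get("name") == name]


# Hypothetical sample data in the same shape as the SDK result
sample = [
    {"name": "salaries.csv", "manifestHash": "aa11"},
    {"name": "notes.json", "manifestHash": "bb22"},
    {"name": "salaries.csv", "manifestHash": "cc33"},
]
print(find_manifest_hashes(sample, "salaries.csv"))  # ['aa11', 'cc33']
```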

Provision datasets via SDK

To perform the dataset operations below, please follow the steps in the Get started with Python SDK tutorial to instantiate the client and establish a session with the Decentriq platform.

Upload a dataset via the SDK and continue in the Decentriq UI

Use this approach if you want to use the SDK only to encrypt and upload datasets, and then continue your workflow in the Decentriq UI, for example:

- Create a DCR, or participate in one as a Data Owner, in the Decentriq UI

- Upload datasets via the SDK for better control and performance

- Access the Decentriq UI and provision the dataset to one or more DCRs

```python
# Generate an encryption key
encryption_key = dq.Key()

# Read the dataset locally, encrypt it and upload it (no provisioning to a DCR)
with open("/path/to/dataset.csv", "rb") as dataset:
    dataset_id = client.upload_dataset(
        dataset,
        encryption_key,
        "dataset_name",
    )
```
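If you need to upload several files at once, you can wrap the snippet above in a loop. The following is a minimal sketch. `upload_directory` is a hypothetical helper. The SDK call is passed in as a function, so the helper itself depends only on the standard library.

```python
from pathlib import Path


def upload_directory(directory, upload, pattern="*.csv"):
    """Upload every file in `directory` that matches `pattern`.

    `upload(file_obj, name)` is supplied by the caller and should wrap the
    SDK upload call; its return values are collected per file name.
    """
    dataset_ids = {}
    for path in sorted(Path(directory).glob(pattern)):
        with path.open("rb") as dataset:
            dataset_ids[path.name] = upload(dataset, path.name)
    return dataset_ids
```

For example, `upload_directory("/path/to/folder", lambda f, name: client.upload_dataset(f, encryption_key, name))` would upload every CSV file in the folder with the key generated above. It returns a dictionary that maps file names to dataset IDs. To use a separate key for each file, generate a new `dq.Key()` inside the lambda instead.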

Note (Publishers only): use this method to upload a dataset, then provision it to a Datalab via the Decentriq UI.

The examples below go one step further: they both upload and provision datasets directly from the SDK.

Upload and provision a dataset to a Data Science DCR

Tabular datasets (.CSV files) to table nodes:

```python
# Generate an encryption key
encryption_key = dq.Key()

# Read the dataset locally, encrypt, upload and provision it to the DCR
with open("/path/to/dataset.csv", "rb") as tabular_dataset:
    dataset_id = dq.data_science.provision_tabular_dataset(
        tabular_dataset,
        name="dataset_name",
        session=session,
        key=encryption_key,
        # Data Clean Room ID copied from the Decentriq UI
        data_room_id=DCR_ID,
        # Table ID copied when hovering over it in the Decentriq UI
        data_node=TABLE_NODE_ID,
    )
```
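Before you provision a CSV to a table node, it can help to check locally that the file matches the table schema. The following is a minimal, standard-library-only sketch of such a pre-check. `check_rows` is a hypothetical helper and not part of the SDK. It mirrors the `salary_data` schema from the setup as plain `(column, type, nullable)` tuples with string type names. It assumes that the file has no header row and that an empty field means NULL. Both are assumptions about the expected CSV format, not documented SDK behaviour.

```python
import csv
import io

# Mirrors the salary_data schema from the setup; types are plain strings here,
# not the SDK's dqsql.PrimitiveType values.
SCHEMA = [("name", "STRING", False), ("salary", "FLOAT64", False)]


def check_rows(lines, schema=SCHEMA):
    """Return a list of problems found; an empty list means no issues detected.

    Assumes a header-less CSV and treats an empty field as NULL.
    """
    problems = []
    for row_number, row in enumerate(csv.reader(lines), start=1):
        if len(row) != len(schema):
            problems.append(f"row {row_number}: expected {len(schema)} columns, got {len(row)}")
            continue
        for value, (column, col_type, nullable) in zip(row, schema):
            if value == "":
                if not nullable:
                    problems.append(f"row {row_number}: {column} must not be empty")
                continue
            if col_type == "FLOAT64":
                try:
                    float(value)
                except ValueError:
                    problems.append(f"row {row_number}: {column}={value!r} is not a number")
    return problems


sample = io.StringIO("Alice,52000.5\nBob,not-a-number\n,48000\n")
for problem in check_rows(sample):
    print(problem)
```

On a real file, open it in text mode with `newline=""` (for example `with open("/path/to/dataset.csv", newline="") as f: check_rows(f)`) and provision it only if the returned list is empty.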

Unstructured (or “raw”) datasets (.JSON, .TXT, .ZIP, etc.) to file nodes:

```python
# Generate an encryption key
encryption_key = dq.Key()

# Read the dataset locally, encrypt, upload and provision it to the DCR
with open("/path/to/file.json", "rb") as raw_dataset:
    dq.data_science.provision_raw_dataset(
        raw_dataset,
        name="My Dataset",
        session=session,
        key=encryption_key,
        # Data Clean Room ID copied from the Decentriq UI
        data_room_id=DCR_ID,
        # File ID copied when hovering over it in the Decentriq UI
        data_node=FILE_NODE_ID,
    )
```
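Because file nodes accept archives such as .ZIP, you can bundle several files into a single raw dataset. The following is a minimal sketch that builds the archive in memory with the standard library `zipfile` module. `bundle_files` is a hypothetical helper. Passing the returned `io.BytesIO` object in place of the opened file assumes that `provision_raw_dataset` accepts any readable binary stream, not only an open file. Alternatively, write the archive to disk and open it with `"rb"` as shown above.

```python
import io
import zipfile
from pathlib import Path


def bundle_files(paths):
    """Zip the given files into an in-memory archive and return it rewound.

    Each file is stored under its base name, so inputs that share a name
    would end up as duplicate entries; rename them first if that can happen.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in map(Path, paths):
            archive.write(path, arcname=path.name)
    buffer.seek(0)  # rewind so the reader starts at the beginning
    return buffer
```

For example, `raw_dataset = bundle_files(["/path/to/a.json", "/path/to/b.txt"])` produces an object that can take the place of `raw_dataset` in the call above.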

Provision an existing dataset to a Data Clean Room via the SDK

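```python
# Setup for this example only: upload a dataset to obtain DATASET_ID.
# Skip this step if the dataset was already uploaded, e.g. via the Decentriq UI.
with open("/path/to/dataset.csv", "rb") as dataset:
    DATASET_ID = client.upload_dataset(
        dataset,
        encryption_key,
        "dataset_name",
    )
```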

If the dataset has already been uploaded (for example, via the Decentriq UI), you can provision it to a DCR as follows.

```python
# Retrieve the encryption key.
# DATASET_ID is an ID copied from the Decentriq UI "Datasets" page or
# taken from the list of datasets retrieved via the SDK.
retrieved_key = client.get_dataset_key(DATASET_ID)

# Reprovision the existing dataset to a DCR
session.publish_dataset(
    # DCR ID copied from the Decentriq UI
    DCR_ID,
    DATASET_ID,
    # Table or File ID copied when hovering over it in the Decentriq UI
    FILE_NODE_ID,
    retrieved_key,
)
```

Deprovision and delete datasets via SDK

Deprovision

```python
# Deprovision the dataset from the Table or File node
session.remove_published_dataset(
    # DCR ID copied from the Decentriq UI
    DCR_ID,
    # Table or File ID copied when hovering over it in the Decentriq UI
    FILE_NODE_ID,
)
```

Delete

Before deleting a dataset from the Decentriq platform, make sure it has been deprovisioned from all DCRs, either with the method above or via the Decentriq UI.

Once it's been completely deprovisioned, it can be deleted:

```python
# Delete the dataset from the Decentriq platform.
# DATASET_ID is an ID copied from the Decentriq UI "Datasets" page or one
# that was retrieved via the SDK.
client.delete_dataset(DATASET_ID)
```
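The required order (deprovision from every DCR first, delete last) can be wrapped in a small helper. The following is a minimal sketch. `deprovision_and_delete` is hypothetical. The SDK methods are passed in as functions. The helper does not discover where a dataset is provisioned, so you must supply the list of `(dcr_id, node_id)` pairs yourself.

```python
def deprovision_and_delete(dataset_id, provisions, remove_published, delete):
    """Remove a dataset from every (dcr_id, node_id) pair, then delete it.

    `remove_published(dcr_id, node_id)` and `delete(dataset_id)` are supplied
    by the caller. If any removal raises, the exception propagates and
    `delete` is never called.
    """
    for dcr_id, node_id in provisions:
        remove_published(dcr_id, node_id)
    delete(dataset_id)
```

With the objects from this page, a call could look like `deprovision_and_delete(DATASET_ID, [(DCR_ID, FILE_NODE_ID)], session.remove_published_dataset, client.delete_dataset)`.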

Copy IDs from the Decentriq UI to use in the SDK

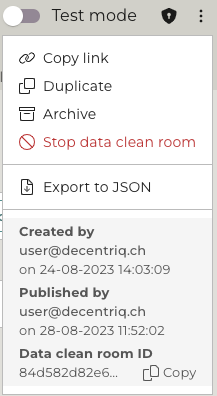

To obtain a DCR ID

Access the DCR, click the … icon in the top-right corner, then click Copy ID.

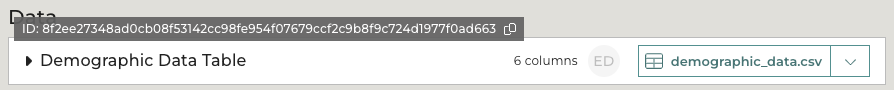

To obtain a Table or File node ID

Access the DCR, locate the Table or File node, hover over it, then click Copy.

To obtain a dataset ID

Access the Datasets page, locate the dataset and copy the ID displayed at the bottom of the details panel.