Manage datasets via the SDK

Setup script

If you want to try this functionality and don't yet have a clean room set up, the following script creates a suitable environment for the rest of this guide.

import decentriq_platform as dq
from decentriq_platform.analytics import *

USER_EMAIL = "@@ YOUR EMAIL HERE @@"
OTHER_EMAIL = "@@ OTHER EMAIL HERE @@"
API_TOKEN = "@@ YOUR TOKEN HERE @@"

client = dq.create_client(USER_EMAIL, API_TOKEN)

# Build an example DCR
builder = AnalyticsDcrBuilder(client=client)
dcr_definition = (
    builder
    .with_name("My DCR")
    .with_owner(USER_EMAIL)
    .with_description("My test DCR")
    .add_node_definitions([
        RawDataNodeDefinition(name="my-raw-data-node", is_required=True),
        TableDataNodeDefinition(
            name="my-table-data-node",
            columns=[
                Column(
                    name="name",
                    format_type=FormatType.STRING,
                    is_nullable=False,
                ),
                Column(
                    name="salary",
                    format_type=FormatType.INTEGER,
                    is_nullable=False,
                ),
            ],
            is_required=True,
        ),
    ])
    .add_participant(
        USER_EMAIL,
        data_owner_of=[
            "my-raw-data-node",
            "my-table-data-node",
        ],
    )
    .build()
)

dcr = client.publish_analytics_dcr(dcr_definition)

# Generate an encryption key
encryption_key = dq.Key()

# Read the dataset locally, then encrypt and upload it to the platform
with open("/path/to/dataset.csv", "rb") as dataset:
    DATASET_ID = client.upload_dataset(
        dataset,
        encryption_key,
        "dataset_name",
    )

Upload a dataset

# Generate an encryption key
encryption_key = dq.Key()

# Upload the dataset to the Decentriq platform
with open("/path/to/dataset.csv", "rb") as dataset:
    dataset_id = client.upload_dataset(
        dataset,
        encryption_key,
        "dataset_name",
    )
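If the file is destined for a table node such as the `my-table-data-node` from the setup script (a non-nullable string `name` and a non-nullable integer `salary`), it can be useful to check the rows locally before uploading. The following is a minimal sketch that does not use the SDK at all: the helper `find_invalid_rows`, the `CHECKS` mapping, and the sample data are hypothetical, and whether your file carries a header row is an assumption left to the `has_header` flag. The platform's own validation remains authoritative.

```python
import csv
import io
from typing import Callable, Dict, List, TextIO


def _is_integer(value: str) -> bool:
    """Return True if the text parses as a Python integer."""
    try:
        int(value)
        return True
    except ValueError:
        return False


# Hypothetical local checks, keyed by column name, mirroring the example schema.
CHECKS: Dict[str, Callable[[str], bool]] = {
    "name": lambda value: value != "",  # non-nullable string
    "salary": _is_integer,              # non-nullable integer
}


def find_invalid_rows(
    csv_text: TextIO, columns: List[str], has_header: bool = False
) -> List[int]:
    """Return the 1-based numbers of data rows that fail the local checks."""
    reader = csv.reader(csv_text)
    if has_header:
        next(reader, None)  # skip the header row, if the file has one
    invalid = []
    for row_number, row in enumerate(reader, start=1):
        wrong_width = len(row) != len(columns)
        if wrong_width or not all(CHECKS[c](v) for c, v in zip(columns, row)):
            invalid.append(row_number)
    return invalid


# Made-up sample data: row 2 has a non-integer salary, row 3 an empty name.
sample = io.StringIO("alice,50000\nbob,not-a-number\n,42000\ncarol,61000\n")
invalid_rows = find_invalid_rows(sample, ["name", "salary"])
print(invalid_rows)  # [2, 3]
```

With a real file, open it in text mode with `newline=""` for this check, then reopen it in binary mode (`"rb"`) for `client.upload_dataset` as shown above.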

List datasets and get details

datasets = client.get_available_datasets()

# Each entry in the list is a dictionary
dataset_name = datasets[0]["name"]
manifest_hash = datasets[0]["manifestHash"]

# Fetch the details of a single dataset by its manifest hash
dataset_details = client.get_dataset(manifest_hash)
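Indexing with `datasets[0]` only works when you already know which entry you want. As a small sketch, the hypothetical helper below looks entries up by name instead, relying only on the `"name"` and `"manifestHash"` keys shown above. It returns a list because this guide does not state that names are unique. The sample list stands in for the result of `client.get_available_datasets()`, and its hash values are made up.

```python
from typing import Any, Dict, List


def manifest_hashes_by_name(datasets: List[Dict[str, Any]], name: str) -> List[str]:
    """Return the manifest hash of every dataset entry whose "name" matches."""
    return [d["manifestHash"] for d in datasets if d.get("name") == name]


# Illustrative stand-in for client.get_available_datasets(); values are made up.
sample_datasets = [
    {"name": "dataset_name", "manifestHash": "aaa111"},
    {"name": "other_dataset", "manifestHash": "bbb222"},
    {"name": "dataset_name", "manifestHash": "ccc333"},
]

matches = manifest_hashes_by_name(sample_datasets, "dataset_name")
print(matches)  # ['aaa111', 'ccc333']
```

Each returned hash can then be passed to `client.get_dataset` as in the snippet above.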

Deprovision and delete datasets

Deprovision a dataset

A deprovisioned dataset can no longer be used in a specific clean room, but remains available to be reprovisioned.

# Deprovision the dataset from the table or file node
dcr.get_node("my-raw-data-node").remove_published_dataset()

Delete a dataset

A deleted dataset can no longer be provisioned to any clean room. Clean rooms that previously had the dataset provisioned may still hold a cached copy, so it is best practice to deprovision the dataset from every clean room that uses it before deleting it from the Decentriq platform.

client.delete_dataset(DATASET_ID)
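The recommended order (deprovision first, delete second) can be wrapped in a small helper. This is a sketch rather than an SDK feature: `deprovision_then_delete` is a hypothetical name, and it uses only the two calls shown in this guide. Note that `remove_published_dataset()` acts on whatever is published to the node and takes no dataset ID, so the caller is assumed to pass only nodes where this dataset is actually provisioned.

```python
from typing import Any, Iterable


def deprovision_then_delete(
    client: Any, dcr: Any, node_names: Iterable[str], dataset_id: str
) -> None:
    """Deprovision the listed nodes of one DCR, then delete the dataset."""
    for node_name in node_names:
        # Removes the dataset currently published to this node.
        dcr.get_node(node_name).remove_published_dataset()
    # Reached only if every deprovisioning call above returned without raising.
    client.delete_dataset(dataset_id)
```

With the objects from the setup script, a call might look like `deprovision_then_delete(client, dcr, ["my-raw-data-node"], DATASET_ID)`. If the dataset is provisioned to several clean rooms, the deprovisioning step would need to run for each of them before the delete.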

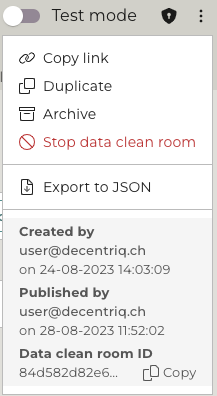

Copy IDs from the Decentriq UI to use in the SDK

To obtain a DCR ID

Access the DCR, click on the … icon in the top-right corner, then Copy ID.

To obtain a table or file node name

Use the same node name as you see in the UI.

To obtain a dataset ID

Access the Datasets page, locate the dataset and copy the ID displayed at the bottom of the details panel.